wsyntax technologies

How a Norco case killed 13TB of our data

Short version: Norco cases cannot handle 3TB drives due to high drive current and shitty engineering. They may kill any drive plugged into the server, after any period of time. AVOID NORCO HOTSWAP CASES.

Comments also on Hacker News.

Background

At Dabney House, we have a medium-sized fileserver where people can store backups, videos, pictures, etc. We’re on a shoestring budget, being run by students for students, but we set up the system using large amounts of redundancy so a single hardware failure wouldn’t take us down [1].

As a result, we bought good servers and host adapters, but skimped on the easiest part: the case. If a computer runs fine splayed out on the test bench, it should work fine in any metal box, right?

Problems

We added a new server and a new set of 3TB drives in the beginning of October. When we assembled the server and slotted in the drives, we were greeted with this surprise:

The backplanes on the new server exploded. We took out the boards to get a closer look at the problem, and with Google’s help, found other people who had similar issues:

I cant believe how much trouble I have had with my norco 4224

“We’ll just return the case, cheap hardware fails all the time, probably bad QC.” So we thought.

In the meantime, we assembled the motherboard and drives by themselves, and hooked it up to the network so we could take advantage of the new space without waiting on a replacement.

Continuing Failure

As we re-distributed data onto the new server, one of our old servers suddenly started failing. It had been experiencing some problems previously (freezing and coming back a couple hours later), but we chalked that up to scaling issues with the diskless boot.

Then, suddenly, this server would rarely get past the drive identification screen. When it did, not all of the drives would show up: of 16, we’d get 9, never all of them.

We opened up the case and found the same problem as in the new server: exploded chips. Yet this server had been working fine up until now; we hadn’t even touched it!

What happened?

We swapped out hardware until we found the culprit: the newer 3TB drives. Every time they were plugged into a Norco case, the result was fire or smoke.

This was tested to exhaustion: by plugging one of our new 3TB drives into any case, we could cause that port to die. We killed at least 8 ports on different backplanes by plugging in a single drive. All of our Norco cases showed the same problem: an inability to deal with 3TB hard drives.

We were left with piles of dead chips, all of which had died suddenly for the same reason.

Current

A little bit of specification searching brought us to the ugly factor: the new drives drew 0.75A from both the +5V and +12V supplies. The old drives only required 0.6A, or maybe even 0.5A.

The drives weren’t defective. The SATA standard allows for at least an ampere drawn from each supply.

Hot-swap Power Considerations

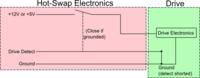

A little safety feature that most hot-swap bays implement is a MOSFET hooked to the +5V/+12V rails. Essentially, the MOSFET acts as a switch: once a ground pin makes contact, the switch turns on.

This prevents a scenario where the drive receives power before ground, something that’s usually lethal to electronics. (The +12V supply tries to return its current through the +5V supply and associated circuits, which kills them pretty quickly.)

This is a conservative design unless the MOSFET can’t handle the current, which is clearly what we were seeing. Norco claims static does the damage, but it’s unlikely this can account for multiple backplanes failing for the same reason. Our 2TB drives were rated to draw 0.5A on +5/+12V, while the 3TB drives were rated for 0.75A, also on both rails.
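To put the two ratings side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the rated figures quoted above (0.5A and 0.75A on each rail) and assumes, for simplicity, that both rails are loaded at their rated current at the same time. The function and variable names are made up for illustration.

```python
# Rated per-rail current quoted in this post, in amps.
# The same figure applies to both the +5V and +12V rails.
RAIL_VOLTAGES = {"+5V": 5.0, "+12V": 12.0}
RATED_CURRENT_A = {"2TB": 0.5, "3TB": 0.75}


def rated_power_w(current_a: float) -> float:
    """Total rated power if `current_a` is drawn from every rail at once (simplifying assumption)."""
    return sum(volts * current_a for volts in RAIL_VOLTAGES.values())


for model, amps in RATED_CURRENT_A.items():
    print(f"{model}: {amps:.2f} A per rail, {rated_power_w(amps):.2f} W total")

# Relative increase in the current each hot-swap switch has to carry.
increase = RATED_CURRENT_A["3TB"] / RATED_CURRENT_A["2TB"] - 1
print(f"Per-rail current increase: {increase:.0%}")
```

By these ratings, each hot-swap switch has to pass 50% more current with a 3TB drive than with a 2TB one.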

Unfortunately, Norco knows about this issue. In our newest case, more MOSFETs are paralleled to handle more current. It’s still not enough: the chips they’re using simply can’t stand the current due to shitty manufacturing. (Their datasheet (original source) rates them for 6A per FET, more than enough under standard conditions!)
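For a sense of how much headroom the datasheet figure implies, here is a small Python sketch. It takes the 6A per-FET rating and the 0.75A rated draw quoted above, and assumes ideal, equal current sharing between paralleled FETs. It ignores spin-up inrush and thermal derating, and the number of FETs in parallel is a hypothetical parameter (we didn't record the exact count per port).

```python
FET_RATED_CURRENT_A = 6.0  # per-FET datasheet rating quoted in this post
DRIVE_CURRENT_A = 0.75     # rated per-rail draw of the 3TB drives


def load_fraction(drive_current_a: float, fet_rating_a: float, fets_in_parallel: int = 1) -> float:
    """Fraction of each FET's rated current in use, assuming ideal equal sharing."""
    per_fet_current = drive_current_a / fets_in_parallel
    return per_fet_current / fet_rating_a


# Hypothetical FET counts, for illustration only.
for n in (1, 2):
    frac = load_fraction(DRIVE_CURRENT_A, FET_RATED_CURRENT_A, n)
    print(f"{n} FET(s) in parallel: {frac:.2%} of rated current per FET")
```

Even a single FET would be running at 12.5% of its rated current on paper, so a part that actually met its datasheet should not need paralleling at all.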

From MOSFETs to Dead Drives

Unfortunately, when MOSFETs fail, bad side effects occur. For 6 of our drives, this meant that the +5V and +12V rails were momentarily connected together, killing their electronics.

2 of them were on the same RAIDZ set; I was able to recover one by swapping drive electronics.

The other four drives are not recognized by the host adapter, even after swapping drive electronics with the same firmware and model number.

That’s 13TB of our data gone, dead [2]. Thanks, Norco.

Quick Workaround

Despite the fact that we had these failures, we still had to get our servers running. The answer is to simply take off the blown chips and solder a wire across, turning the power supplies “always on” for the drives.

Luckily, the way the SATA power connector is designed makes it hard for power-before-ground scenarios to occur. We’re planning on replacing the Norco cases as soon as we get a little more budget. (You should find a qualified EE to do this rework; they should be able to follow the power traces and pinouts to make the right solder bridge.)

Summary

Don’t use cheap hardware. It will fail in ways you thought impossible when you least expect it.

If you find yourself in this situation with no budget left to switch drives, ask your local EE to help you solder across the source/drain of the hot-swap FETs.

While Norco seems to be Supermicro’s biggest competitor in the server chassis market, their engineering is an order of magnitude worse. Under-spec parts and shoddy hacks (placing multiple faulty MOSFETs in parallel is not a solution!) abound. If you thought you could trust the metal box, perhaps, but any circuit design Norco lays its hands on is doomed to failure.

To Norco: Your hardware is terribly designed and assembled. Nearly 15% of our data is inaccessible due to your incompetence. Don’t expect us to be coming back.

If anything, you should be paying to recover our data. It’s embarrassing that you’ve messed up a simple hot-swap power circuit.

Notes

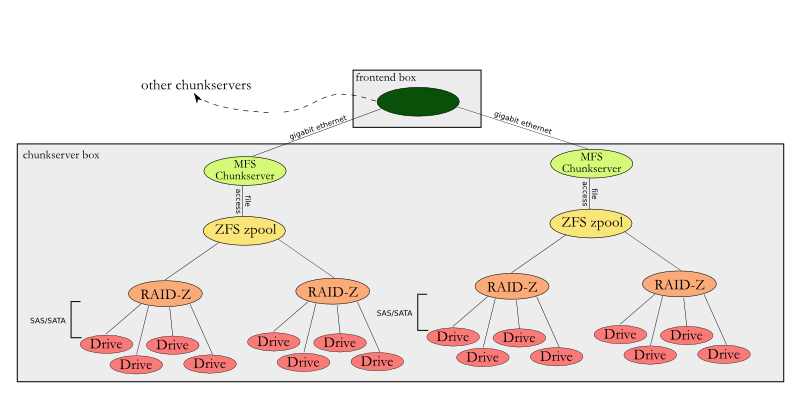

[1]: The full system diagram looks like:

ZFS protects us from drive failures with RAID-Z. MooseFS protects us from server failures: if our server hardware fails, we plug the drives into a desktop and reattach to the network. No data loss, and there’s always some way to get back online without expensive custom parts.

[2]: We can likely recover the data if… we submit our drives to a data recovery facility (the platters are undamaged). However, we don’t have money for that, being only a small dorm. Nominally, it’s gone forever.